If you’ve ever needed to extract information from a website programmatically, you’ve likely heard of various tools and libraries. One powerful yet often overlooked tool is Helium, a Python library designed to simplify web automation and scraping. In this guide, I’ll walk you through the process of installing and using Helium to obtain information from a website, sharing some hands-on examples along the way.

Installing Helium and Dependencies

Before we start, you’ll need to install Helium and its dependencies. Helium relies on Selenium, a well-known web automation library, and ChromeDriver to control the Chrome browser. Here’s how to set everything up:

Install Helium

You can easily install Helium using pip. Open your terminal or command prompt and run:

```bash
pip install helium
```

Install Selenium

Helium uses Selenium under the hood. pip normally pulls it in automatically as a Helium dependency, but you can also install it explicitly:

```bash
pip install selenium
```

Download ChromeDriver

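ChromeDriver is what lets Selenium (and therefore Helium) control the Chrome browser. Download the ChromeDriver version that matches your installed version of Chrome; for Chrome 115 and newer, ChromeDriver is published through the Chrome for Testing downloads rather than the old ChromeDriver download page. Depending on your setup, this step may not be necessary at all: Selenium 4.6 and later include Selenium Manager, which can locate or download a matching driver automatically.

If you download it manually, extract the file and place it in a directory on your system PATH so Selenium can find it. The scripts in this guide don’t pass a driver path explicitly.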
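If you’re not sure everything is in place, a short script can report what it finds. The sketch below uses only the standard library: it looks up the installed versions of the helium, selenium and beautifulsoup4 distributions (BeautifulSoup is used for parsing later in this guide) and checks whether a chromedriver executable is on your PATH, printing its version if so. check_setup is a hypothetical helper name, and a missing chromedriver is not necessarily a problem if you rely on Selenium Manager to fetch the driver.

```python
#!/usr/bin/env python3
import shutil
import subprocess
from importlib import metadata


def check_setup(packages=("helium", "selenium", "beautifulsoup4"), driver="chromedriver"):
    """Hypothetical helper: report package versions and whether the driver is on PATH."""
    report = {}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None  # distribution not installed in this environment

    path = shutil.which(driver)  # None if no such executable is on PATH
    report[driver] = path
    if path:
        try:
            # chromedriver prints its version; compare the major number with Chrome's
            result = subprocess.run([path, "--version"], capture_output=True,
                                    text=True, timeout=10)
            report[f"{driver} --version"] = result.stdout.strip() or None
        except (OSError, subprocess.SubprocessError):
            report[f"{driver} --version"] = None
    return report


if __name__ == "__main__":
    for key, value in check_setup().items():
        print(f"{key}: {value if value is not None else 'NOT FOUND'}")
```

Run it with the same Python interpreter (or virtual environment) you’ll use for the scraper, since that is the environment whose packages it inspects.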

Writing a Simple Scraper with Helium

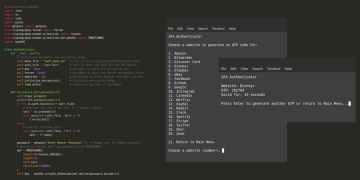

Let’s walk through an example of how to use Helium to scrape a website. Suppose we want to extract the titles and links of articles from a news website. Here’s how you can achieve that:

Import Necessary Libraries

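First, import Helium and BeautifulSoup for parsing HTML. BeautifulSoup is a separate package; install it with pip install beautifulsoup4 if you don’t already have it:

```python
#!/usr/bin/env python3
from helium import start_chrome
from bs4 import BeautifulSoup
```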

Start a Browser Session

Launch a Chrome browser instance and open the target website:

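```python
#!/usr/bin/env python3
from helium import start_chrome
from bs4 import BeautifulSoup

if __name__ == "__main__":
    browser = start_chrome('https://news.ycombinator.com/')
```

This will open the specified URL in Chrome. start_chrome also returns the underlying Selenium WebDriver object, which is what gives the later steps access to browser.page_source.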
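Because start_chrome launches a real Chrome window, an exception anywhere between here and the “Close the Browser” step would leave the browser and its driver process running. One way to guard against that is a small context manager that always calls a shutdown function on exit, even when the body raises. The sketch below needs only the standard library: browser_session is a hypothetical name, and the demo uses stand-in start and stop functions so it runs without a browser. With Helium, you would pass it a function that calls start_chrome plus Helium’s kill_browser, as shown in the comment.

```python
#!/usr/bin/env python3
from contextlib import contextmanager


@contextmanager
def browser_session(start, stop):
    """Hypothetical helper: call start(), yield its result, and always call stop()."""
    browser = start()
    try:
        yield browser
    finally:
        stop()  # runs on a normal exit and also when the with-block raises


# With Helium this could look like (needs Chrome, so it is not run here):
#   from helium import start_chrome, kill_browser
#   with browser_session(lambda: start_chrome('https://news.ycombinator.com/'),
#                        kill_browser) as browser:
#       html = browser.page_source

if __name__ == "__main__":
    events = []

    def fake_start():
        events.append("start")
        return "fake-browser"

    def fake_stop():
        events.append("stop")

    try:
        with browser_session(fake_start, fake_stop):
            raise RuntimeError("scraping failed")
    except RuntimeError:
        pass
    print(events)  # ['start', 'stop']
```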

Extract and Parse HTML

Get the page source and parse it with BeautifulSoup:

```python
#!/usr/bin/env python3
from helium import start_chrome
from bs4 import BeautifulSoup

if __name__ == "__main__":
    browser = start_chrome('https://news.ycombinator.com/')
    html = browser.page_source
    soup = BeautifulSoup(html, 'html.parser')
```

Find and Extract Article Details

Use BeautifulSoup to locate and extract information from the page. In this example, we’ll extract article titles and their links:

```python
#!/usr/bin/env python3
from helium import start_chrome
from bs4 import BeautifulSoup

if __name__ == "__main__":
    browser = start_chrome('https://news.ycombinator.com/')
    html = browser.page_source
    soup = BeautifulSoup(html, 'html.parser')
    # Each story on the front page is a table row with the class "athing"
    articles = soup.find_all('tr', class_='athing')
    for article in articles:
        title_span = article.find('span', class_='titleline')
        if title_span:
            # The first link inside the title span is the article link itself
            title_tag = title_span.find('a')
            if title_tag:
                title = title_tag.get_text()
                link = title_tag['href']
                print(f'Title: {title}')
                print(f'Link: {link}')
```

This script looks inside each table row (tr) with the class athing for the span with the class titleline. On Hacker News, that span holds each article’s title link, so the first a tag inside it gives both the title text and the URL. If the site’s markup changes, these selectors will need updating.
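Because the parsing step only needs an HTML string, you can move it into a function and try it out without launching Chrome. The sketch below does that using BeautifulSoup’s CSS-selector method, select, which expresses the same row, title span and link path as a single selector; the > combinator keeps only the link that is a direct child of the title span, so other links nested deeper inside that span are skipped. SAMPLE_HTML is a simplified, hand-written stand-in shaped like the structure described above, not a copy of the live Hacker News markup, and extract_articles is a hypothetical name. In the real script you would pass it browser.page_source.

```python
#!/usr/bin/env python3
from bs4 import BeautifulSoup

# Simplified, hand-written markup in the shape described above (not real HN HTML)
SAMPLE_HTML = """
<table>
  <tr class="athing"><td><span class="titleline"><a href="https://example.com/a">First story</a>
    <span class="sitebit comhead">(<a href="from?site=example.com"><span class="sitestr">example.com</span></a>)</span>
  </span></td></tr>
  <tr class="athing"><td><span class="titleline"><a href="item?id=2">Ask HN: Second story</a></span></td></tr>
  <tr class="athing"><td>No title span in this row</td></tr>
</table>
"""


def extract_articles(html):
    """Return (title, link) pairs for the title link in each tr.athing row."""
    soup = BeautifulSoup(html, "html.parser")
    articles = []
    # '>' matches only <a> tags that are direct children of span.titleline
    for tag in soup.select("tr.athing span.titleline > a"):
        articles.append((tag.get_text(strip=True), tag["href"]))
    return articles


if __name__ == "__main__":
    for title, link in extract_articles(SAMPLE_HTML):
        print(f"Title: {title}")
        print(f"Link: {link}")
```

Keeping the parsing in its own function also makes the “Element Not Found” case easier to debug: you can save a page’s HTML to a file and rerun the parser on it as often as you like.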

Close the Browser

After you’ve extracted the needed data, close the browser session to free up resources. Helium’s kill_browser() shuts down the browser together with all of its windows; Selenium’s browser.close(), by contrast, only closes the current window and can leave the driver process running:

```python
#!/usr/bin/env python3
from helium import start_chrome, kill_browser
from bs4 import BeautifulSoup

if __name__ == "__main__":
    browser = start_chrome('https://news.ycombinator.com/')
    html = browser.page_source
    soup = BeautifulSoup(html, 'html.parser')
    articles = soup.find_all('tr', class_='athing')
    for article in articles:
        title_span = article.find('span', class_='titleline')
        if title_span:
            title_tag = title_span.find('a')
            if title_tag:
                title = title_tag.get_text()
                link = title_tag['href']
                print(f'Title: {title}')
                print(f'Link: {link}')
    # Shut down Chrome and its driver process
    kill_browser()
```

Handling Multiple Pages

Sometimes, you may need to scrape data from multiple pages. This involves navigating through the pages and repeating the scraping process. Rather than starting a new browser for every page, you can reuse the same session and move between pages with Helium’s go_to:

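```python
#!/usr/bin/env python3
from helium import start_chrome, go_to, kill_browser
from bs4 import BeautifulSoup

def scrape_page(url, max_pages=5):
    browser = start_chrome(url)
    # Safety limit: stop after max_pages pages even if a "More" link remains
    for _ in range(max_pages):
        html = browser.page_source
        soup = BeautifulSoup(html, 'html.parser')
        articles = soup.find_all('tr', class_='athing')
        for article in articles:
            title_span = article.find('span', class_='titleline')
            if title_span:
                title_tag = title_span.find('a')
                if title_tag:
                    title = title_tag.get_text()
                    link = title_tag['href']
                    print(f'Title: {title}')
                    print(f'Link: {link}')
        # The "More" link at the bottom of the page points to the next page
        next_link = soup.find('a', class_='morelink')
        if next_link:
            next_url = next_link['href']
            next_url = f'https://news.ycombinator.com/{next_url}'
            go_to(next_url)  # navigate within the same browser session
        else:
            break
    kill_browser()

if __name__ == "__main__":
    scrape_page('https://news.ycombinator.com/')
```

This script follows the “More” link at the bottom of each page, using go_to to stay within the same browser session, and stops when no “More” link remains or after max_pages pages. The max_pages limit (5 here) is a safeguard added to this example so it doesn’t walk through every available page; adjust it to your needs. The next-page URL is built by appending the link’s relative href to the site’s base URL, which matches how Hacker News formats its “More” link; other sites may need a different URL structure.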
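Building the next URL by gluing strings together works for Hacker News’s relative “More” links, but it breaks if a site uses absolute URLs or a different path layout. A more general option is the standard library’s urllib.parse.urljoin, which resolves an href relative to the page it appeared on, much as a browser resolves a relative link. The sketch below pairs it with a bounded page loop. follow_pages and its get_next_href callback are hypothetical names, and the link mapping in the demo is made up so the logic runs without a browser; in the Helium script, get_next_href would read the morelink href from the current page source, and each yielded URL would be passed to go_to.

```python
#!/usr/bin/env python3
from urllib.parse import urljoin


def follow_pages(start_url, get_next_href, max_pages=5):
    """Hypothetical helper: yield page URLs, following "next page" links.

    get_next_href(url) should return the raw href of the next-page link on
    that page, or None when there is no such link.
    """
    url = start_url
    for _ in range(max_pages):
        yield url
        href = get_next_href(url)
        if not href:
            break
        # Resolve relative hrefs ("?p=2", "news?p=2") against the current page URL;
        # absolute hrefs are returned unchanged
        url = urljoin(url, href)


if __name__ == "__main__":
    # Made-up link structure standing in for real pages
    fake_links = {
        "https://news.ycombinator.com/": "?p=2",
        "https://news.ycombinator.com/?p=2": "?p=3",
    }
    for page in follow_pages("https://news.ycombinator.com/", fake_links.get):
        print(page)
```

Keeping the page limit inside the generator means the caller never has to remember to stop, which is useful on sites where the “next” link never disappears.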

Troubleshooting Common Issues

- Element Not Found: If you can’t find the elements you’re looking for, check that your selectors are correct and that the elements are actually present in the page source at the moment you read it. Use your browser’s developer tools to inspect the HTML and adjust your selectors if necessary.

- Browser Compatibility: Make sure your ChromeDriver version matches the version of Chrome installed on your system. A mismatch usually prevents the browser from starting at all, typically with a SessionNotCreatedException from Selenium.

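- Dynamic Content: For content that appears after the initial page load, make sure you give the page enough time to render before reading it. Helium’s wait_until repeatedly checks a condition until it holds or a timeout (10 seconds by default) expires:

  ```python
  from helium import Text, find_all, wait_until

  # Wait until the "More" link has been rendered, as a sign the article list is loaded
  wait_until(lambda: find_all(Text('More')))
  ```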
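Conceptually, wait_until keeps calling the function you give it until that function returns a truthy value or the timeout runs out. The stand-alone sketch below illustrates that polling idea; it is not Helium’s implementation (Helium raises a TimeoutException on failure, whereas this sketch raises Python’s built-in TimeoutError), and poll_until and its parameter names are hypothetical.

```python
#!/usr/bin/env python3
import time


def poll_until(condition_fn, timeout_secs=10.0, interval_secs=0.5):
    """Hypothetical helper: call condition_fn until it returns something truthy."""
    deadline = time.monotonic() + timeout_secs
    while True:
        result = condition_fn()
        if result:
            return result  # hand back the truthy value, e.g. a non-empty list
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout_secs} seconds")
        time.sleep(interval_secs)  # wait before checking again


if __name__ == "__main__":
    start = time.monotonic()
    # Stand-in condition: becomes true about one second after the script starts
    poll_until(lambda: time.monotonic() - start > 1.0, timeout_secs=5, interval_secs=0.1)
    print("condition met")
```

This is also why the condition is passed as a function (a lambda) rather than as a value: it has to be re-evaluated on every check.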

Conclusion

Helium provides a straightforward way to automate web interactions and scrape data. By setting up your environment, writing a few lines of code, and handling common issues, you can extract valuable information from websites efficiently. With Helium, you can focus on the data extraction itself rather than getting bogged down by the complexities of web automation.