In my last post, I dug into AdGuard, a robust ad blocker that tackles trackers and ads head-on. But how do you confirm it’s doing the job? Privacy tools need proof, not promises, especially in 2025, with trackers adapting to Manifest V3 and beyond. Today, we’re crafting a Python script to audit your network for trackers, all from the terminal. No GUI, just raw data. It sniffs traffic, checks the domains it sees against a tracker list, and logs what it finds over a set time.

This is aimed at Linux users who know their way around Python and the command line. Think of it as a sibling to my ClamAV guide. We’ll use Scapy to capture packets for a duration you choose and match them against EasyPrivacy. Let’s get to it.

What You’ll Need

Set up your system with these:

- Linux: Tested on Linux Mint 22.01; most distros should work (tweak package names if needed).

- Python 3.x: Check with:

python3 --version

Install if missing via:

sudo apt install python3

- Scapy: For sniffing. Get it with:

sudo pip3 install scapy

If you receive build errors, install:

sudo apt install python3-dev

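- Requests: To fetch the tracker list. Install with sudo pip3 install requests.

If pip refuses with an externally-managed-environment error (common on recent Debian- and Ubuntu-based releases), the distro packages are an alternative: sudo apt install python3-scapy python3-requests.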

- Root Access: Sniffing demands sudo.

Find your network interface (e.g., eth0, eno1, wlan0) with the following command. We’ll need it later:

ip link
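If you’d rather stay in Python, the standard library can list interface names too. This is a minimal sketch, not part of tracker_audit.py; list_interfaces is a hypothetical helper name, and it only reports names, not whether a link is up.

```python
import socket


def list_interfaces():
    """Return the names of the network interfaces the kernel reports."""
    # socket.if_nameindex() yields (index, name) pairs, e.g. (1, "lo").
    return [name for _index, name in socket.if_nameindex()]


for name in list_interfaces():
    print(name)
```

You still need ip link (or similar) to see which of these is actually active.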

The Plan

Our script, tracker_audit.py, will:

- Pull the EasyPrivacy tracker list.

- Capture HTTP, HTTPS, and DNS packets for a set duration (e.g., 5 minutes), pulling domains from HTTP Host headers and DNS queries.

- Log tracker hits and clean domains to both terminal and file.

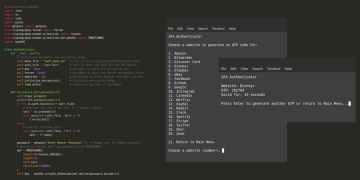

Running it over time gives us a solid sample of what’s hitting your network, which is perfect for catching trackers that don’t show up in a quick burst. It’s lean, terminal-only, and fits my privacy-coding niche (see also my 2FA Python script).

The Full Script

Save this as tracker_audit.py and make it executable: chmod +x tracker_audit.py.

#!/usr/bin/env python3

import argparse
import logging
import os
import sys

import requests
from scapy.all import sniff, Scapy_Exception
# Importing the HTTP layer (Scapy 2.4.3+) registers it so port-80
# requests are dissected as HTTPRequest.
from scapy.layers.http import HTTPRequest  # noqa: F401

# Set up logging to both a file and the terminal
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s - %(levelname)s - %(message)s",
    handlers=[logging.FileHandler("tracker_audit.log"), logging.StreamHandler()]
)
logger = logging.getLogger()


def fetch_tracker_list(url="https://easylist.to/easylist/easyprivacy.txt"):
    """Fetch and parse a tracker list from a URL."""
    try:
        logger.info("Fetching tracker list...")
        response = requests.get(url, timeout=10)
        response.raise_for_status()
        # Keep "||domain^..." rules: drop the leading "||" and everything from "^" on.
        trackers = {
            line.split("^")[0][2:]
            for line in response.text.splitlines()
            if line.startswith("||") and not line.startswith("||*")
        }
        logger.info(f"Loaded {len(trackers)} trackers.")
        return trackers
    except requests.RequestException as e:
        logger.error(f"Failed to fetch tracker list: {e}")
        sys.exit(1)


def extract_domain(packet):
    """Extract domain from HTTP request or DNS query."""
    try:
        if packet.haslayer("HTTPRequest"):
            # Host header of a plaintext HTTP request
            host = packet["HTTPRequest"].Host.decode(errors="ignore")
            return host
        elif packet.haslayer("DNS") and packet["DNS"].qr == 0:
            # qr == 0 marks a query (not a response); strip the trailing dot
            qname = packet["DNSQR"].qname.decode(errors="ignore")
            return qname.rstrip(".")
        return None
    except Exception as e:
        logger.debug(f"Error extracting domain: {e}")
        return None


def check_packet(packet, trackers):
    """Check if packet contains a tracker domain."""
    domain = extract_domain(packet)
    if domain and domain in trackers:
        logger.warning(f"Tracker detected: {domain}")
    elif domain:
        logger.info(f"Clean domain: {domain}")


def audit_network(duration, interface, trackers):
    """Sniff network traffic and audit for trackers over a set time."""
    try:
        logger.info(f"Starting audit on {interface} for {duration} seconds...")
        sniff(
            iface=interface,
            filter="tcp port 80 or tcp port 443 or udp port 53",
            prn=lambda pkt: check_packet(pkt, trackers),
            store=False,  # don't keep packets in memory during long captures
            timeout=duration
        )
        logger.info("Audit complete.")
    except Scapy_Exception as e:
        logger.error(f"Sniffing failed: {e}. Run as root and check interface.")
        sys.exit(1)
    except KeyboardInterrupt:
        logger.info("Audit stopped by user.")


def main():
    """Main function with argument parsing."""
    parser = argparse.ArgumentParser(
        description="Audit network traffic for trackers using Python and Scapy."
    )
    parser.add_argument(
        "-d", "--duration", type=int, default=300,
        help="Duration to capture packets in seconds (default: 300)"
    )
    parser.add_argument(
        "-i", "--interface", type=str, required=True,
        help="Network interface to sniff (e.g., eth0, wlan0)"
    )
    args = parser.parse_args()

    # Packet capture needs root privileges
    if os.geteuid() != 0:
        logger.error("This script must be run as root (e.g., sudo).")
        sys.exit(1)

    trackers = fetch_tracker_list()
    audit_network(args.duration, args.interface, trackers)


if __name__ == "__main__":
    main()

How It Works

Let’s walk through the guts of this.

1. Setup and Logging

We kick off with imports and logging to tracker_audit.log and the terminal. Timestamps keep it readable, and dual output means you won’t miss anything.

2. Fetching the Tracker List

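fetch_tracker_list() hits easylist.to for the EasyPrivacy list. It keeps the rules that start with || (skipping wildcard rules), strips the leading || and everything from the first ^ onward, and stores what remains, mostly domains, in a set. If the request flops, it logs the error and exits cleanly.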
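One thing to watch: some EasyPrivacy rules target a path (||host/path) rather than a whole domain, and a simple split on ^ can leave those path fragments in the set. Here is a hedged sketch of a stricter parser that only accepts pure ||domain^ rules, with or without $options. The name parse_tracker_domains and the regex are my own illustration, not part of the script above.

```python
import re

# "||" + hostname + "^", optionally followed by "$options"; nothing else allowed.
_RULE_RE = re.compile(
    r"^\|\|([a-z0-9-]+(?:\.[a-z0-9-]+)+)\^(?:\$.*)?$", re.IGNORECASE
)


def parse_tracker_domains(text):
    """Collect bare domains from Adblock-style '||domain^' rules."""
    domains = set()
    for line in text.splitlines():
        match = _RULE_RE.match(line.strip())
        if match:
            # Lowercase so later lookups are case-insensitive.
            domains.add(match.group(1).lower())
    return domains
```

Path-specific and wildcard rules are skipped on purpose: the script only ever sees hostnames, so a rule about one URL on a host shouldn’t flag the whole host.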

3. Extracting Domains

extract_domain() grabs domains from:

- HTTP: The Host header (e.g., jmoorewv.com).

- DNS: Query names (e.g., google.com).

This catches unencrypted traffic and DNS lookups, broadening our scope.
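Host headers can carry a port (example.com:8080) or mixed case, and DNS names end in a dot, so it can help to normalize before comparing. A small sketch, assuming a hypothetical normalize_host helper that you would call on whatever extract_domain() returns:

```python
def normalize_host(raw):
    """Lowercase a Host header or DNS name and strip port and trailing dot."""
    if isinstance(raw, bytes):
        raw = raw.decode(errors="ignore")
    host = raw.strip().lower()
    if host.startswith("["):
        # Bracketed IPv6 literal such as "[::1]:8080": keep what is inside.
        return host[1:].split("]", 1)[0]
    # Drop an optional ":port" suffix, then the trailing dot DNS names carry.
    host = host.split(":", 1)[0]
    return host.rstrip(".")
```

For example, normalize_host(b"Example.COM:8080") gives "example.com".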

4. Checking Packets

check_packet() looks up each extracted domain in the tracker set (an exact match). Hits get a WARNING, clean ones an INFO. Simple, effective.
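Because the lookup is exact, a query for stats.g.doubleclick.net won’t match a list entry for doubleclick.net. If you want subdomains to count, one option is to walk up the parent domains. This is a sketch under that assumption; matching_tracker is a hypothetical helper, not something the script above defines.

```python
def matching_tracker(domain, trackers):
    """Return the listed tracker that `domain` equals or is a subdomain of, else None."""
    labels = domain.lower().rstrip(".").split(".")
    # Try the full name first, then progressively shorter parent domains.
    # Stops before the bare top-level label (e.g. "net").
    for start in range(len(labels) - 1):
        candidate = ".".join(labels[start:])
        if candidate in trackers:
            return candidate
    return None
```

In check_packet() you could then test matching_tracker(domain, trackers) instead of domain in trackers. Matching on whole labels keeps notdoubleclick.net from being flagged by accident.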

5. Auditing the Network

audit_network() uses Scapy to sniff on your interface, filtering HTTP (80), HTTPS (443), and DNS (53). If it fails (wrong interface, no root), it tells you why.

6. Command-Line Interface

main() parses -d for duration (default 300 seconds, or 5 minutes) and -i for interface. A root check ensures it won’t run without sudo.
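With type=int, argparse will happily accept -d 0 or a negative number. If you want to reject those up front, a custom type function is one way to do it. A minimal sketch; positive_seconds and build_parser are names I made up for illustration.

```python
import argparse


def positive_seconds(value):
    """argparse type: accept only whole numbers of seconds greater than zero."""
    try:
        seconds = int(value)
    except ValueError:
        raise argparse.ArgumentTypeError(f"{value!r} is not a whole number") from None
    if seconds <= 0:
        raise argparse.ArgumentTypeError("duration must be greater than zero")
    return seconds


def build_parser():
    """Same two options as the script, with a validated duration."""
    parser = argparse.ArgumentParser(description="Audit network traffic for trackers.")
    parser.add_argument("-d", "--duration", type=positive_seconds, default=300)
    parser.add_argument("-i", "--interface", required=True)
    return parser
```

argparse turns the ArgumentTypeError into a normal usage message, so a bad value gets a short error instead of a traceback.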

Running the Script

Here’s the drill:

- Find Your Interface:

ip link

Pick your active one (e.g., wlan0).

- Run It:

sudo ./tracker_audit.py -i wlan0 -d 600

This runs for 10 minutes (600 seconds). Leave off -d to use the default of 300 seconds (5 minutes).

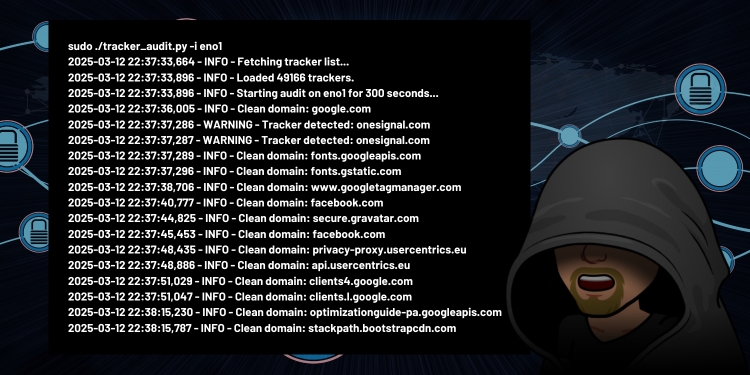

- Sample Output:

2025-03-12 10:00:00,123 - INFO - Fetching tracker list...
2025-03-12 10:00:01,456 - INFO - Loaded 15000 trackers.
2025-03-12 10:00:01,789 - INFO - Starting audit on wlan0 for 600 seconds...
2025-03-12 10:00:02,012 - INFO - Clean domain: jmoorewv.com
2025-03-12 10:00:03,345 - WARNING - Tracker detected: doubleclick.net
2025-03-12 10:10:01,678 - INFO - Audit complete.

Check tracker_audit.log for the full rundown.
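A ten-minute log gets long, so a quick tally helps. The sketch below counts tracker hits per domain, assuming the log format shown in the sample output. count_tracker_hits and summarize_log are hypothetical helpers, not part of tracker_audit.py.

```python
from collections import Counter

MARKER = "WARNING - Tracker detected: "


def count_tracker_hits(lines):
    """Count how many times each tracker domain appears in audit log lines."""
    hits = Counter()
    for line in lines:
        # Everything after the marker is the domain the script logged.
        _prefix, found, domain = line.partition(MARKER)
        if found:
            hits[domain.strip()] += 1
    return hits


def summarize_log(path="tracker_audit.log", top=10):
    """Print the most frequent tracker domains found in a log file."""
    with open(path, encoding="utf-8") as log:
        for domain, count in count_tracker_hits(log).most_common(top):
            print(f"{count:5d}  {domain}")
```

Call summarize_log() from the directory that holds the log once a run has finished.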

Testing It Out

I tested this on my Linux Mint rig, first with AdGuard off. Over 5 minutes, trackers like doubleclick.net and googlesyndication.com popped up. With AdGuard on, it was mostly clean domains, though a few DNS queries slipped through (more on that below). Try it with your setup. Toggle your blocker or VPN (like Surfshark) and watch the difference. Time-based capture gives you a real feel for what’s happening.
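To put numbers on the on/off comparison, you can diff the tracker domains from two runs. Keep in mind the script appends to tracker_audit.log (logging.FileHandler opens in append mode by default), so rename or move the log between runs. This is a rough sketch; compare_runs is a hypothetical helper that takes two collections of domains you gathered yourself.

```python
def compare_runs(blocker_off, blocker_on):
    """Group tracker domains by which run(s) they appeared in."""
    off, on = set(blocker_off), set(blocker_on)
    return {
        "only_without_blocker": sorted(off - on),  # gone once the blocker was on
        "in_both": sorted(off & on),               # still showing up
        "only_with_blocker": sorted(on - off),     # new in the second run
    }
```

A domain missing from the second run isn’t proof it was blocked, since your browsing may simply not have triggered it, so treat the result as a hint rather than a verdict.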

Limitations and Caveats

- Root Needed: Sniffing’s a privileged task. sudo is non-negotiable.

- HTTPS Limits: No decryption here, so we’re stuck with DNS and unencrypted HTTP. Use mitmproxy if you want full TLS visibility.

- Interface Pick: A wrong choice (e.g., eth0 when you’re on Wi-Fi) means an error or an empty capture. Verify with ip link.

- Install Hiccups: Scapy might need python3-dev if pip fails.

Conclusion

There you have it, a Python-powered tracker auditor that doesn’t mess around. No fluff, no GUI, just a terminal tool that sniffs your network for a solid chunk of time and tells you what’s sneaking through. Running this for 5 or 10 minutes gives you a real snapshot of your setup, whether you’re hardening a Linux box or tweaking your daily driver.

So, fire it up. Let it run while you grab a coffee, then dig into that tracker_audit.log. What’d it catch? Any trackers surprise you? This is your window into what’s really going on.